Continuing Education

About Our Bootcamp

Throughout this comprehensive introduction, students explore how neural networks work, study model evaluation techniques and optimizers, and learn how overfitting arises and how regularization methods counter it. The path runs from the basic building blocks of AI to deploying real-world models, including the ethical considerations that AI raises. Because it covers these foundations, the bootcamp prepares students for any specialization within deep learning and for the more advanced topics and challenges that follow.

Lit AI

Courses Offered

Model Evaluation Techniques

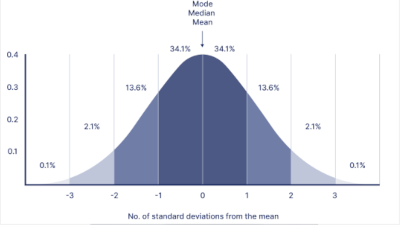

Comprehensive overview of key performance metrics: accuracy, precision, recall, F1 score, and various loss functions. Explanation of training/validation/test splits and cross-validation, and why they matter in the evaluation process. High-level discussion of bias and variance, underfitting and overfitting, and their implications for model performance.
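
As a small illustration of the classification metrics named above, the sketch below computes accuracy, precision, recall, and F1 from binary labels by counting true/false positives and negatives. The function name `binary_metrics` and the sample labels are hypothetical and not taken from the course materials.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for 0/1 labels (hypothetical helper)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
print(binary_metrics(y_true, y_pred))
```

With these labels there are 3 true positives, 3 true negatives, 1 false positive and 1 false negative, so all four metrics come out to 0.75.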

Learning Rates

Exploration of the concept of learning rates and their role in training neural networks.

Discussion of how different learning rates affect convergence during training.

Hands-on project: students fine-tune the learning rate of a pre-built model to achieve a specified performance goal, and interpret metric charts to understand the impact of learning rate adjustments.
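
To make the effect of the learning rate on convergence concrete, here is a minimal sketch assuming the simplest possible setting: plain gradient descent on the toy loss f(w) = w², whose gradient is 2w. The loss, starting point, and learning-rate values are illustrative choices, not the course's project model.

```python
def gradient_descent(lr, w0=5.0, steps=20):
    """Minimise the toy loss f(w) = w**2 (gradient 2w) with a fixed learning rate."""
    w = w0
    for _ in range(steps):
        w -= lr * 2 * w  # each step multiplies w by (1 - 2 * lr)
    return w


for lr in (0.01, 0.1, 0.5, 1.1):
    print(f"lr={lr}: w after 20 steps = {gradient_descent(lr):.4g}")
```

Because each step multiplies w by (1 - 2·lr), a very small rate (0.01) is still far from the minimum after 20 steps, 0.1 gets close, 0.5 lands exactly on it in one step for this particular loss, and 1.1 makes |w| grow every step, i.e. training diverges.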

Optimizers

Introduction to various optimization algorithms essential for training neural networks (SGD, Adam, RMSprop, etc.).

Insights into how different optimizers impact the speed and quality of training.

Scenario-based quizzes: students will be presented with different contexts and must choose the most appropriate optimizer.
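
For a feel of how the optimizers named above differ, the sketch below applies SGD, RMSprop, and Adam to the same one-parameter toy loss f(w) = (w - 3)². The update rules follow the standard published forms; the hyperparameter values are common defaults chosen for illustration, not settings prescribed by the course.

```python
import math


def grad(w):
    # Gradient of the toy loss f(w) = (w - 3)**2, minimised at w = 3.
    return 2 * (w - 3)


def sgd(w, lr=0.1, steps=100):
    # Plain gradient descent: step size proportional to the raw gradient.
    for _ in range(steps):
        w -= lr * grad(w)
    return w


def rmsprop(w, lr=0.01, rho=0.9, eps=1e-8, steps=1000):
    v = 0.0
    for _ in range(steps):
        g = grad(w)
        v = rho * v + (1 - rho) * g * g  # running average of squared gradients
        w -= lr * g / (math.sqrt(v) + eps)  # step normalised by gradient scale
    return w


def adam(w, lr=0.01, b1=0.9, b2=0.999, eps=1e-8, steps=1000):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = b1 * m + (1 - b1) * g  # first moment (momentum)
        v = b2 * v + (1 - b2) * g * g  # second moment
        m_hat = m / (1 - b1 ** t)  # bias correction for zero initialisation
        v_hat = v / (1 - b2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


for name, opt in (("SGD", sgd), ("RMSprop", rmsprop), ("Adam", adam)):
    print(f"{name}: w = {opt(0.0):.4f}")
```

The adaptive methods scale each step by a running estimate of the gradient's magnitude, so their step size stays near `lr` regardless of how steep the loss is, whereas SGD's step shrinks and grows with the gradient itself.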

Activation Functions

Overview of popular activation functions (ReLU, ELU, Sigmoid, TanH, etc.), their graphs, and their mathematical interpretations. Discussion of why activation functions are necessary, focusing on non-linearity and the ability to learn complex patterns. Case studies: examining scenarios to determine which activation function is best suited to each.
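
The sketch below writes out the four activation functions listed above and illustrates why non-linearity matters: two stacked linear "layers" collapse into a single linear map, while a ReLU between them does not. The single-weight layers and the values of `w1` and `w2` are hypothetical, chosen only for illustration.

```python
import math


def relu(x):
    return max(0.0, x)


def elu(x, alpha=1.0):
    # Identity for positive inputs, smooth exponential saturation towards -alpha otherwise.
    return x if x > 0 else alpha * (math.exp(x) - 1)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    return math.tanh(x)


w1, w2 = 3.0, -0.5  # hypothetical one-weight "layers"


def linear_stack(x):
    # Equivalent to the single linear map (w2 * w1) * x = -1.5 * x.
    return w2 * (w1 * x)


def relu_stack(x):
    # The ReLU in between makes the overall function piecewise, not linear.
    return w2 * relu(w1 * x)


for x in (-2.0, 0.0, 2.0):
    print(x, relu(x), round(elu(x), 4), round(sigmoid(x), 4), round(tanh(x), 4))
print(linear_stack(-2.0), linear_stack(2.0), relu_stack(-2.0), relu_stack(2.0))
```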

Overfitting, Dropout, and Gaussian Noise

Explanation of overfitting in deep learning and why it’s problematic. Introduction to dropout and the addition of Gaussian noise as regularization techniques. Practical project: students apply dropout and/or Gaussian noise to a pre-built neural network that overfits, to improve its generalization.
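
As a rough sketch of the two regularizers named above, assuming a layer's activations are a plain list of floats: dropout is shown in its inverted form (survivors are rescaled by 1/(1 - p) during training so nothing changes at inference), and Gaussian noise adds zero-mean noise only while training. The function names and parameters are hypothetical, not a specific framework's API.

```python
import random


def dropout(activations, p=0.5, training=True, rng=random):
    """Inverted dropout: zero each unit with probability p and rescale the survivors."""
    if not training or p == 0.0:
        return list(activations)  # identity at inference time
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def gaussian_noise(activations, stddev=0.1, training=True, rng=random):
    """Add zero-mean Gaussian noise to each activation during training only."""
    if not training:
        return list(activations)
    return [a + rng.gauss(0.0, stddev) for a in activations]


rng = random.Random(0)  # seeded so the example is repeatable
h = [1.0] * 10
print(dropout(h, p=0.5, rng=rng))
print(gaussian_noise(h, stddev=0.1, rng=rng))
print(dropout(h, training=False))
```

Rescaling by 1/(1 - p) keeps the expected value of each activation unchanged, so the same network can be used at inference without dropout and without any extra correction.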

Building Neural Networks

Fundamentals of designing a neural network architecture from scratch. Guidance on making informed decisions about optimizers, learning rates, activation functions, and regularization techniques. Major project: students create their own neural network to solve a given problem, making the critical decisions that shape its performance.

2-D Data and Convolutional Neurons

Introduction to handling 2D data and to the structure and principles behind convolutional neural networks (CNNs). Explanation of convolution, pooling, and classification layers. Comprehensive project: learners build a CNN model, integrating knowledge from previous courses (such as learning rates and optimizers).
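
The convolution and pooling operations mentioned above can be sketched in plain Python. This assumes a single-channel image stored as a list of lists, "valid" padding, and stride 1 for the convolution. Like most deep-learning libraries, it computes cross-correlation (the kernel is not flipped). The 2×2 kernel is a hand-picked vertical-edge detector for illustration; in a CNN the kernel values are learned.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation with stride 1 (what DL libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            # Slide the kernel over the image and sum the element-wise products.
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling with a size x size window (stride = size)."""
    return [
        [
            max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


image = [[0, 0, 1, 1, 1] for _ in range(5)]  # dark left half, bright right half
kernel = [[-1, 1], [-1, 1]]  # responds to left-to-right brightness increases
features = conv2d(image, kernel)
print(features)
print(max_pool2d(features))
```

The convolution output peaks only in the column where the edge sits, and pooling keeps that strong response while halving each spatial dimension.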

1-D Data and Recurrent Neurons

Overview of 1D data such as sequences and time series, and the basics of recurrent neural networks (RNNs) and long short-term memory networks (LSTMs). High-level discussion of the challenges of sequence data and how LSTMs help address some of them. Interactive task: students apply an RNN or LSTM to sequence data to achieve a specified objective.
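
To show what "recurrent" means in practice, here is a minimal sketch of a vanilla (Elman) RNN with a single hidden unit, applying h_t = tanh(w_x·x_t + w_h·h_{t-1} + b) over a 1-D sequence with the same weights at every step. The scalar weights are hypothetical, hand-picked values, and the LSTM itself is not implemented here.

```python
import math


def rnn_forward(sequence, w_x=0.5, w_h=0.8, b=0.0, h0=0.0):
    """Single-unit vanilla RNN over a 1-D sequence; returns the hidden state at each step."""
    h = h0
    states = []
    for x in sequence:
        # The same weights are reused at every time step; h carries information forward.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# A single "signal" at the start of a long, otherwise empty sequence.
states = rnn_forward([1.0] + [0.0] * 20)
print([round(s, 4) for s in states])
```

With these weights, the first input's influence on the hidden state shrinks by at least a factor of 0.8 per step, so after 20 steps little of it remains. This is a forward-pass view of why plain RNNs struggle with long-range dependencies, the kind of problem that LSTM gating is designed to mitigate.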

End-to-End Learning Models

Capstone Project: Recap of key learnings from all courses. End-to-end deep learning project, from data preprocessing and model building to evaluation and deployment. Students present their projects, detailing the choices made at each step and reflecting on ethical considerations.

BOOTCAMP SYLLABUS

COURSE OVERVIEW

This intensive bootcamp is designed to take participants from a foundational understanding of deep learning concepts to a proficient level in building and deploying sophisticated neural network models. Over the duration of the bootcamp, participants will engage with a mix of theoretical content, hands-on projects, real-world case studies, and interactive assessments, all aimed at providing a comprehensive understanding of deep learning. Here’s what to expect:

PROJECTS

Throughout the bootcamp, participants will undertake multiple projects designed to apply theoretical knowledge in practical scenarios. These include fine-tuning learning rates, choosing optimizers, applying regularization techniques to combat overfitting, building neural networks, and a capstone project that encompasses an end-to-end deep learning model implementation.

WHAT TO EXPECT

Expert Instruction: Courses are designed and led by experts in the field of deep learning, providing in-depth instruction and insight.

Interactive Learning: Beyond just theory, participants will engage in practical projects and interactive assessments that reinforce learning and develop practical skills.

Resource Access: Access to extensive learning materials, including readings, video content, and real-world case studies.

Support: Continuous support and feedback from instructors throughout the course duration.

Capstone Project: An all-encompassing final project that requires participants to apply all the skills and knowledge gained throughout the bootcamp.

COMPLETION CRITERIA

Participants are expected to complete all interactive projects, participate in peer reviews, and successfully deliver their capstone project. Performance in these areas is critical in determining whether a participant successfully completes the bootcamp.